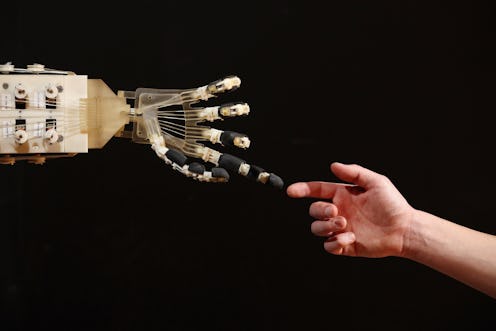

Could Artificial Intelligence Be Our Downfall?

The combined brain power of Stephen Hawking, Elon Musk, and Bill Gates would be an awesomely frightening thing to behold, but according to the Microsoft co-founder and former CEO, no human (or combination thereof) could ever be as terrifying as the machines we may one day build. When asked about the potential dangers presented by artificial intelligence, Bill Gates joined his fellow tech gurus in voicing concern about the unmapped potential of machines, and questioned the naiveté of those who remain unperturbed by the growing power of technology. During his Reddit "Ask Me Anything" session on Wednesday, Gates divulged a number of personal details, including his greatest fears, saying, "I am in the camp that is concerned about super intelligence ... and don't understand why some people are not concerned."

While the scenarios in films like I, Robot and the Terminator franchise may exist only in science fiction today, Gates joins the ranks of other super intelligent (human) individuals in warning that they could eventually become a dangerous reality. Machines do not yet possess the intelligence needed to make them a viable threat, but this might soon change as humans devote more and more resources to making robots more capable and personable, gradually, and perhaps inadvertently, giving them increasing levels of human-like brain power. At some point, Gates and others warn, robots could actually surpass Homo sapiens in terms of dominance: the most literal sense of the student becoming the master.

Gates explained, "First the machines will do a lot of jobs for us and not be super intelligent. That should be positive if we manage it well. A few decades after that though the intelligence is strong enough to be a concern." The billionaire believes that robots and other machines have already progressed by leaps and bounds, and that this development will grow exponentially in the coming decades. While some of these advancements will benefit the human race, others might hold more sinister possibilities. Said Gates:

There will be more progress in the next 30 years than ever. Mechanical robot tasks like picking fruit or moving a hospital patient will be solved. Once computers/robots get to a level of capability where seeing and moving is easy for them then they will be used very extensively.

Much of the danger in artificial intelligence, Gates and others agree, lies in possibilities that humans have not yet explored and therefore cannot keep in check. Indeed, we are not, and perhaps never will be, fully aware of the potential machines have, and we may be unable to control what we create. Despite his Iron Man-esque space pursuits, Musk has repeatedly warned of the dangers inherent in artificial intelligence, even going so far as to say, "With artificial intelligence we are summoning the demon."

Musk is so worried about the future of machines that he recently donated $10 million in an effort to maintain positive relationships with robots, or rather, to keep artificial intelligence "friendly." Calling AI our "biggest existential threat" and "potentially more dangerous than nukes," the Tesla and SpaceX CEO gave the sum to the Future of Life Institute to fund research grants aimed at investigating, and preventing, the ramifications of over-developed machinery.

Stephen Hawking, the brilliant physicist, has also voiced considerable concern over the future of robots and AI. In a piece he co-wrote with Future of Life Institute co-founder Max Tegmark and two other leading voices in the field, Hawking noted:

Recent landmarks such as self-driving cars, a computer winning at Jeopardy! and the digital personal assistants Siri, Google Now and Cortana are merely symptoms of an IT arms race fueled by unprecedented investments and building on an increasingly mature theoretical foundation. Such achievements will probably pale against what the coming decades will bring.

And while he admitted, "Success in creating AI would be the biggest event in human history," he also warned, "Unfortunately, it might also be the last, unless we learn how to avoid the risks." The future of AI, Hawking suggested, could bring such unintended consequences as machines that would have the capacity to outsmart financial markets, out-invent human researchers, out-manipulate human leaders, and develop weapons we cannot even understand. Indeed, Hawking wrote, "Whereas the short-term impact of AI depends on who controls it, the long-term impact depends on whether it can be controlled at all."

In an interview with the BBC, the physicist expressed further concerns:

I think the development of full artificial intelligence could spell the end of the human race. Once humans develop artificial intelligence it would take off on its own and redesign itself at an ever-increasing rate. Humans, who are limited by slow biological evolution, couldn’t compete and would be superseded.

Of course, despite their concerns, none of these men is suggesting that technological development stop altogether; all of their careers (and, in Hawking's case, his very ability to communicate) are, after all, contingent on the continuous improvement of machinery and robotics. Gates himself juxtaposed his comments about the dangers of AI with the announcement of a new Microsoft project known as the "Personal Agent," which, The Washington Post explains, is "being designed to help people manage their memory, attention and focus."

Ultimately, it seems, the main concern is the responsible development of artificial intelligence, and the maintenance of a certain humility when dealing with such untapped potential. Otherwise, as Louis Del Monte, a physicist and entrepreneur, worries, machines could indeed become the single most dominant species on planet Earth.

Images: Getty Images (4)